Today we are excited to announce that Diagrid Catalyst is now in public beta. 🎉 You can access the Catalyst APIs and infrastructure services by signing up for free and then join our demo webinar on October 3rd.

Building distributed systems today is a mess of fragmented single-purpose frameworks and libraries, all of which require domain-specific expertise. The idea with Catalyst is to give developers APIs for building microservice-based applications, covering everything from messaging to data and workflow. It’s built on top of the popular open source Dapr project, which is used by teams at thousands of leading companies like Grafana Labs, HDFC Bank, Nvidia, NASA, Prudential and many more.

Catalyst is completely serverless, requires no management whatsoever, and plays nice with your existing infrastructure.

"Compared with building the same app using various stacks, Catalyst just gave me an easier way to do it: minimal moving parts, isolation and decoupling which I adore" - Rosius Ndimofor Ateh - AWS Serverless Hero

What does Catalyst do for you?

Since we announced Catalyst, we've continued to hear about the complexity developers face managing libraries, frameworks, and cloud services. Add infrastructure lock-in, boilerplate code, and security vulnerabilities, and developers are overwhelmed both by this complexity and by decisions that have long-term consequences.

The Catalyst APIs lower the cost of building microservices and speed up time to market, while leaving you free to change the backing infrastructure services. Over the past 10 months in private preview, Catalyst has been shown to remove thousands of lines of boilerplate code, provide proven software patterns, reduce code for cloud infrastructure by over 80%, and enable switching of infrastructure in hours. It delivers built-in security, resiliency, and observability without developers having to write encryption logic or instrument their code.

Catalyst APIs can be used no matter where your code runs. You are not tied into any particular cloud platform or compute service. Whether you are migrating and modernizing an existing application to VMs, using Kubernetes to scale, or prefer a serverless container or function platform, they can all be used with the Catalyst APIs.

You only need three things. Your code, your infrastructure and Catalyst APIs.

What can you build?

Before diving into how to get started with Catalyst, let’s talk about how to use the APIs, through the lens of using Catalyst with AWS. Let’s start with identity and synchronous connectivity.

Catalyst gives every application an identity, or appId. It's a unique name within the scope of a Catalyst project you create. Identity is essential: not only can you perform secure actions (for example, only app A is allowed to call app B), you can also use this name to span code deployments that use Catalyst. In this regard, you can consider Catalyst a network overlay across any compute, where any code (or running process) is discoverable and addressable.

Want to synchronously call a Lambda function from an AWS EC2 instance? Or call an application running in a particular pod across two AWS EKS clusters in different regions? No problem. Catalyst can do this with the service invocation API; all you need is the appId. This secure, resilient connectivity at the application level opens up endless possibilities for connecting legacy applications to new ones, or for reaching applications that use specialized hardware or are tied to particular compute services.

You run your application code where it wants to be. Catalyst does all the heavy lifting including end-to-end security and resiliency (retries, circuit breakers, timeouts) with its service invocation API.
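To make one of those resiliency policies concrete, here is a minimal sketch of a circuit breaker, the kind of logic you would otherwise hand-roll around every remote call. It is illustrative only and not Catalyst's implementation: the class name, the `maxFailures` and `resetAfterMs` options and the injectable `now` clock are all assumptions made for this example, and it wraps synchronous functions to keep the sketch short.

```javascript
// Illustrative circuit breaker; every name here is hypothetical.
class CircuitBreaker {
  constructor({ maxFailures = 3, resetAfterMs = 5000, now = Date.now } = {}) {
    this.maxFailures = maxFailures;   // consecutive failures before opening
    this.resetAfterMs = resetAfterMs; // how long to stay open before a trial call
    this.now = now;                   // injectable clock, handy for testing
    this.failures = 0;
    this.openedAt = null;             // null means the circuit is closed
  }

  // "closed": calls pass, "open": calls are rejected, "half-open": one trial call is allowed
  get state() {
    if (this.openedAt === null) return "closed";
    return this.now() - this.openedAt >= this.resetAfterMs ? "half-open" : "open";
  }

  call(fn) {
    if (this.state === "open") {
      throw new Error("circuit open: call rejected without reaching the target");
    }
    try {
      const result = fn();
      // Any success closes the circuit and resets the failure count.
      this.failures = 0;
      this.openedAt = null;
      return result;
    } catch (err) {
      this.failures += 1;
      // A failed trial call, or too many consecutive failures, (re)opens the circuit.
      if (this.state === "half-open" || this.failures >= this.maxFailures) {
        this.openedAt = this.now();
      }
      throw err;
    }
  }
}
```

With Catalyst this behavior is configured rather than coded, so nothing like the class above needs to live in your application.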

Let’s look at some code using the Catalyst service invocation API. Here AppA, running on an EC2 instance, wants to call the neworder method over HTTP on AppB, which is a Lambda function.

First you create appIds for your code with a couple of CLI commands which also create the necessary API authentication tokens and Catalyst endpoints.

> diagrid appid create appA

> diagrid appid create appB

Then, to call the Lambda neworder function, you simply use the appId and authentication token (apiToken) for AppB in a standard HTTP call, like this:

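const order = { orderId: 1 };
const url = `${catalystEndpoint}/neworder`;
const response = await fetch(url, {
  method: "POST",
  headers: {
    "Content-Type": "application/json",
    "dapr-app-id": "appB", // appId of the application being called
    "dapr-api-token": apiToken, // API token created by the CLI commands above
  },
  body: JSON.stringify(order),
});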

Catalyst knows the appId for the Lambda function and where it is running, and routes the call to the neworder function. This is the code in the function.

// `app` is assumed to be an Express app with JSON body parsing enabled
app.post("/neworder", (req, res) => {
  console.log("message received %s", JSON.stringify(req.body));
  res.status(200).send();
});

Simple. With this you get end-to-end security, retries to handle network failures, and call tracing. All built-in.
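If you make several of these calls, it can help to build the request in one place. The following is a minimal sketch, assuming the same two headers used in the call above; `buildInvokeRequest` and its parameter names are hypothetical and not part of any SDK.

```javascript
// Hypothetical helper: builds the fetch arguments for a service invocation call
// using the "dapr-app-id" and "dapr-api-token" headers shown in this post.
function buildInvokeRequest({ catalystEndpoint, targetAppId, apiToken, method, payload }) {
  const base = catalystEndpoint.replace(/\/+$/, ""); // drop trailing slashes
  const path = method.replace(/^\/+/, "");           // drop leading slashes
  return {
    url: `${base}/${path}`,
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        "dapr-app-id": targetAppId,
        "dapr-api-token": apiToken,
      },
      body: JSON.stringify(payload),
    },
  };
}

// Usage (Node 18+ provides a global fetch):
//   const { url, options } = buildInvokeRequest({
//     catalystEndpoint, targetAppId: "appB", apiToken, method: "neworder", payload: { orderId: 1 },
//   });
//   const response = await fetch(url, options);
//   if (!response.ok) throw new Error(`neworder failed with status ${response.status}`);
```

Keeping the request construction in a pure function also makes it easy to unit test without touching the network.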

Asynchronicity. What are you interested in being notified about?

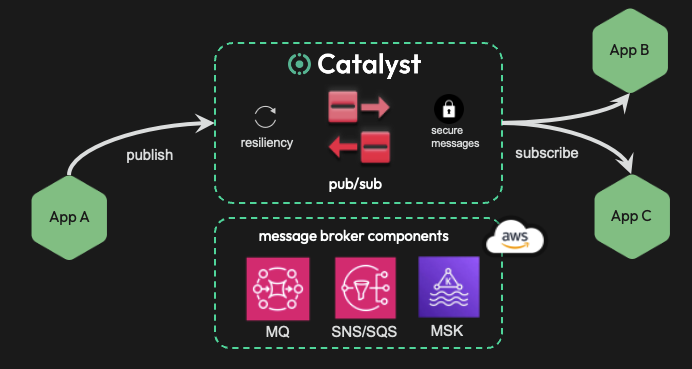

Pub/sub with asynchronous messaging is central to today's event-driven applications. However, developers are required to choose and learn message-broker-specific SDKs and then bake them into their code. This is time-consuming and ties the application to a specific choice of infrastructure.

Catalyst avoids both of these issues. With Catalyst, all the best practices for using an infrastructure SDK are built into components, and these are swappable. You get all the benefits of your chosen message broker, while your application code stays abstracted behind the pub/sub API. For example, you may have chosen to use the AWS SNS/SQS service and then later decided to replace it with the AWS MQ service. This change can be done in hours with no code impact, just an infrastructure component swap.

Less code to maintain and complete flexibility.

Let’s look at how you use the pub/sub API with the Dapr JavaScript SDK. Having provisioned your AWS message broker and configured the Catalyst component (in this case named 'pubsub-broker'), you publish an order to the 'new-order' topic by creating a client with your Catalyst endpoint and calling the publish API. This is your code in AppA:

import { DaprClient } from "@dapr/dapr";

const client = new DaprClient({ catalystEndpoint });
const order = { orderId: 1 };
await client.pubsub.publish("pubsub-broker", "new-order", order);

To subscribe to message topics, you can either do this declaratively in YAML or with a CLI command. The following CLI command creates a subscription called order-subscription for AppB for the 'new-order' topic, where messages are routed to the /neworder method.

> diagrid subscription create order-subscription \
    --component pubsub-broker \
    --topic new-order \
    --route /neworder \
    --scopes appB

Now we just need code to consume the messages. This is your code in AppB.

// `app` is assumed to be an Express app with JSON body parsing enabled
app.post("/neworder", (req, res) => {
  console.log("message received %s", JSON.stringify(req.body));
  res.status(200).send();
});

That’s it. Any number of applications can subscribe to the new-order topic and receive messages. What's more, the Catalyst publish API has many capabilities, including secure messages, bulk messaging, automatic creation of consumer groups and more, saving you from writing tedious boilerplate code.
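One detail the handler above glosses over: open source Dapr wraps published messages in a CloudEvents envelope by default, so the order usually arrives under a `data` field, and a subscriber can reply with a `SUCCESS`, `RETRY` or `DROP` status. The sketch below assumes Catalyst delivers messages the same way; `unwrapMessage`, `handleNewOrder` and `isValidOrder` are hypothetical names used only for illustration.

```javascript
// Returns the published payload whether or not it arrives wrapped in a
// CloudEvents envelope (assumption: Dapr-style delivery with a `data` field).
function unwrapMessage(body) {
  const isCloudEvent =
    body !== null && typeof body === "object" && "specversion" in body && "data" in body;
  return isCloudEvent ? body.data : body;
}

// Hypothetical validation for the order shape used in this post.
function isValidOrder(order) {
  return order !== null && typeof order === "object" && Number.isInteger(order.orderId);
}

// Decides how to acknowledge a delivery. The status strings follow Dapr's
// documented subscriber responses.
function handleNewOrder(body, processOrder) {
  const order = unwrapMessage(body);
  if (!isValidOrder(order)) return { status: "DROP" }; // malformed: do not redeliver
  try {
    processOrder(order);
    return { status: "SUCCESS" };
  } catch (err) {
    return { status: "RETRY" }; // processing failed: ask for redelivery
  }
}

// In the Express route this could be used as:
//   res.status(200).json(handleNewOrder(req.body, saveOrder));
```

Separating the decision from the route keeps the acknowledgement logic testable without a running broker.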

Statefulness. What’s in my shopping cart?

The Catalyst state API stores key-value (KV) data to create stateful, long-running microservices. As with the pub/sub API, you use components to configure your choice of backing database without coupling your code to infrastructure, for example storing state in AWS DynamoDB or AWS Aurora. KV storage is familiar to all developers.

However, the Catalyst state API goes further than storing KV data. First, you can use the state API to securely share one database across multiple apps (multi-tenancy), saving you money. Second, as a developer you frequently need to signal other applications about an upsert operation with a message, and you want the write and the message to be transactional: the outbox pattern. With Catalyst you can do this by simply choosing a message broker to pair with your state store, indicating that the two work together as a transactional outbox. A hard distributed systems pattern, solved with a few setup choices on your state component.

Your database, your message broker, your code. The state API does the hard work.
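For readers new to the outbox pattern, here is a conceptual, in-memory sketch of the idea: the state change and the outgoing message are recorded together, and a separate relay step publishes the message afterwards. This is not how Catalyst implements it, and every name (`OutboxStore`, `saveWithMessage`, `relay`) is hypothetical.

```javascript
// Conceptual, in-memory illustration of the transactional outbox pattern.
class OutboxStore {
  constructor() {
    this.state = new Map(); // stands in for the table holding application state
    this.outbox = [];       // stands in for the outbox table in the same database
  }

  // Records the state change and the outgoing message as one unit:
  // both are staged first, then committed together.
  saveWithMessage(key, value, topic) {
    const stagedState = new Map(this.state);
    const stagedOutbox = [...this.outbox];
    stagedState.set(key, value);
    stagedOutbox.push({ topic, payload: value, published: false });
    this.state = stagedState;
    this.outbox = stagedOutbox;
  }

  // Relay step: publishes pending messages and marks each one only after the
  // publish call returns, so a failed publish is retried on the next run.
  relay(publish) {
    for (const entry of this.outbox) {
      if (entry.published) continue;
      publish(entry.topic, entry.payload);
      entry.published = true;
    }
  }
}
```

The point of the pattern is that a saved order can never exist without its notification eventually being sent, and a notification is never sent for an order that was not saved.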

Using the state API for KV data is as straightforward as configuring a component with your database of choice (here named 'statestore') and then calling save. Let’s use the Dapr JavaScript SDK again.

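import { DaprClient } from "@dapr/dapr";

const client = new DaprClient({ catalystEndpoint });
const order = { orderId: 1 };
// State keys are strings, so convert the numeric orderId
const state = [{ key: String(order.orderId), value: order }];
await client.state.save("statestore", state);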

And to retrieve the order, call the get method with the key.

const savedOrder = await client.state.get("statestore", String(order.orderId));
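When saving many records, a small helper can produce the array shape passed to the save call above. This is a sketch under assumptions: `toStateItems` and the `order-` key prefix are hypothetical conventions, chosen here so that keys are always strings and different kinds of records in one store do not collide.

```javascript
// Hypothetical helper: turns order objects into [{ key, value }] items with
// string keys, ready to pass to a state save call.
function toStateItems(orders, prefix = "order-") {
  return orders.map((order) => ({
    key: `${prefix}${order.orderId}`,
    value: order,
  }));
}

// Usage: await client.state.save("statestore", toStateItems([{ orderId: 1 }, { orderId: 2 }]));
```

The same key format then has to be used when reading the order back.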

Durable state machines. Solving the hard problem of unreliable code

Workflows in Catalyst let you run a set of activities using different patterns like sequential, fan-out/in, external triggers, monitors, etc. They’re code-based, so you can debug them like any other code, and they’re lightweight and scalable. And like other Catalyst APIs, you can choose your own database to store workflow state (and easily swap it).

Workflows greatly simplify coordinating work across many microservices, whether by sending events or calling methods. Most workflow applications are not islands: their activities send messages to other services or call them directly. Workflow activities can use the Catalyst pub/sub and service invocation APIs with just a few lines of code, putting you on a golden path to solving the hard problems of unreliable code and unreliable communication together.

To use the workflow API, you write and register a set of activities for your business code and then schedule a new workflow instance. Here is an example of C# code using the SDK to create an OrderProcessingWorkflow.

using Dapr.Workflow;

var builder = WebApplication.CreateBuilder(args);

// Add Dapr workflow services
builder.Services.AddDaprWorkflow(options =>
{
    options.RegisterWorkflow<OrderProcessingWorkflow>();
    options.RegisterActivity<NotifyActivity>();
    options.RegisterActivity<ReserveInventoryActivity>();
    options.RegisterActivity<ProcessPaymentActivity>();
    options.RegisterActivity<UpdateInventoryActivity>();
});

var app = builder.Build();

// Schedule a new workflow instance
// (workflowClient is resolved from the services registered above)
app.MapPost("/workflow/start", async (DaprWorkflowClient workflowClient, OrderPayload order) =>
{
    var guid = Guid.NewGuid();
    await workflowClient.ScheduleNewWorkflowAsync(
        name: nameof(OrderProcessingWorkflow),
        input: order,
        instanceId: guid.ToString());
    return Results.Ok(new { WorkflowId = guid });
});

app.Run();

The Catalyst workflow API is versatile and resilient, enabling you to coordinate event-driven applications, run state machines, perform batch processing, use the saga pattern to keep state safe and consistent across distributed microservices, and respond to triggers from external systems.
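To illustrate the saga idea mentioned above, here is a plain JavaScript sketch: steps run in order, and if one fails, the steps that already completed are undone in reverse. It does not use the workflow API and has none of its durability; `runSaga` and the step shape (`name`, `run`, `compensate`) are hypothetical.

```javascript
// Plain-JavaScript illustration of a saga: run steps in order and, on failure,
// compensate the completed steps in reverse order.
function runSaga(steps, context) {
  const completed = [];
  for (const step of steps) {
    try {
      step.run(context);
      completed.push(step);
    } catch (err) {
      for (const done of completed.reverse()) {
        if (done.compensate) done.compensate(context);
      }
      return { ok: false, failedStep: step.name, error: err.message };
    }
  }
  return { ok: true };
}

// Example steps, loosely mirroring the order-processing activities in this post.
const orderSteps = [
  {
    name: "reserveInventory",
    run: (ctx) => ctx.log.push("reserved"),
    compensate: (ctx) => ctx.log.push("released"),
  },
  {
    name: "processPayment",
    run: (ctx) => {
      if (ctx.paymentFails) throw new Error("card declined");
      ctx.log.push("paid");
    },
    compensate: (ctx) => ctx.log.push("refunded"),
  },
];
```

A workflow engine adds what this sketch lacks: progress is persisted, so a crash midway resumes from the last completed activity instead of starting over.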

Simplified serverless infrastructure.

Catalyst includes both serverless pub/sub and key-value store services. This serverless infrastructure eliminates painful operational maintenance without sacrificing security and performance, while automatically scaling with your usage regardless of where your code is running. When you create a project in Catalyst, you can choose to also create these infrastructure services. We make infrastructure scalable and easy to use for developers.

To create a Diagrid KV store or Diagrid pub/sub, you can use the CLI:

> diagrid kv create mykvstore

> diagrid pubsub create mypubsub

Built on open source

In 2019 we created and open-sourced the CNCF Dapr project. Today it’s used by thousands of large enterprises like HDFC Bank, NASA, Bosch, Zeiss, Rakuten, IBM, Dell, PWC, Tesla and more to power their mission-critical services. Our 2023 State of Dapr report showed that the majority of developers using Dapr ship their projects 30% faster than they would by hacking together frameworks and SDKs themselves. With Catalyst your savings are even higher, thanks to the zero cost of managing Dapr sidecars and the increased flexibility of running your code anywhere.

"Catalyst APIs stand out among other API-driven solutions; backed by the Dapr CNCF project, software engineers can rely on it without worrying about the heavy lifting core logic" - Sardor - software engineering manager, Epam Systems

Pricing

It's completely free. There are limits on usage, which you can find more details on here, and if you want to go beyond these, reach out to us.

Inspire me!

Want to see Catalyst in action? Join our interactive demo webinar on October 3rd.

Last month we announced the winners of our Catalyst AWS hackathon, which had many incredible and innovative submissions. Eventually we narrowed it down to the top three, which you can check out on GitHub. You can see these winning projects at the Catalyst demo webinar.

Try it out

It's easy to get started. You can sign up at http://catalyst.diagrid.io, create a project and dive into a quickstart in your language of choice.

In a nutshell, there are five simple commands:

> diagrid login

> diagrid project quickstart --type pubsub --language javascript --name mytestapp

> diagrid dev start

> curl -i -X POST http://localhost:5001/order \
    -H "Content-Type: application/json" \
    -d '{"orderId":1}'

> diagrid dev stop

You can also browse and experience the APIs with the Catalyst portal. Use the interactive getting started guide below👇 or simply dive into the getting started documentation https://docs.diagrid.io/catalyst/

It’s an incredible time to be a developer in the modern era of microservices and cloud native app development, and we are excited to now get Catalyst into everyone's hands. Join the Catalyst community to ask questions or reach out to us directly. We would be thrilled to hear from you 👋.
