This post highlights the major new features and changes for the APIs and SDKs in release v1.15. We celebrated the v1.15 release with a webinar in which contributors demonstrated the new features. You can watch the recording here:

APIs & SDKs

Conversation API (alpha)

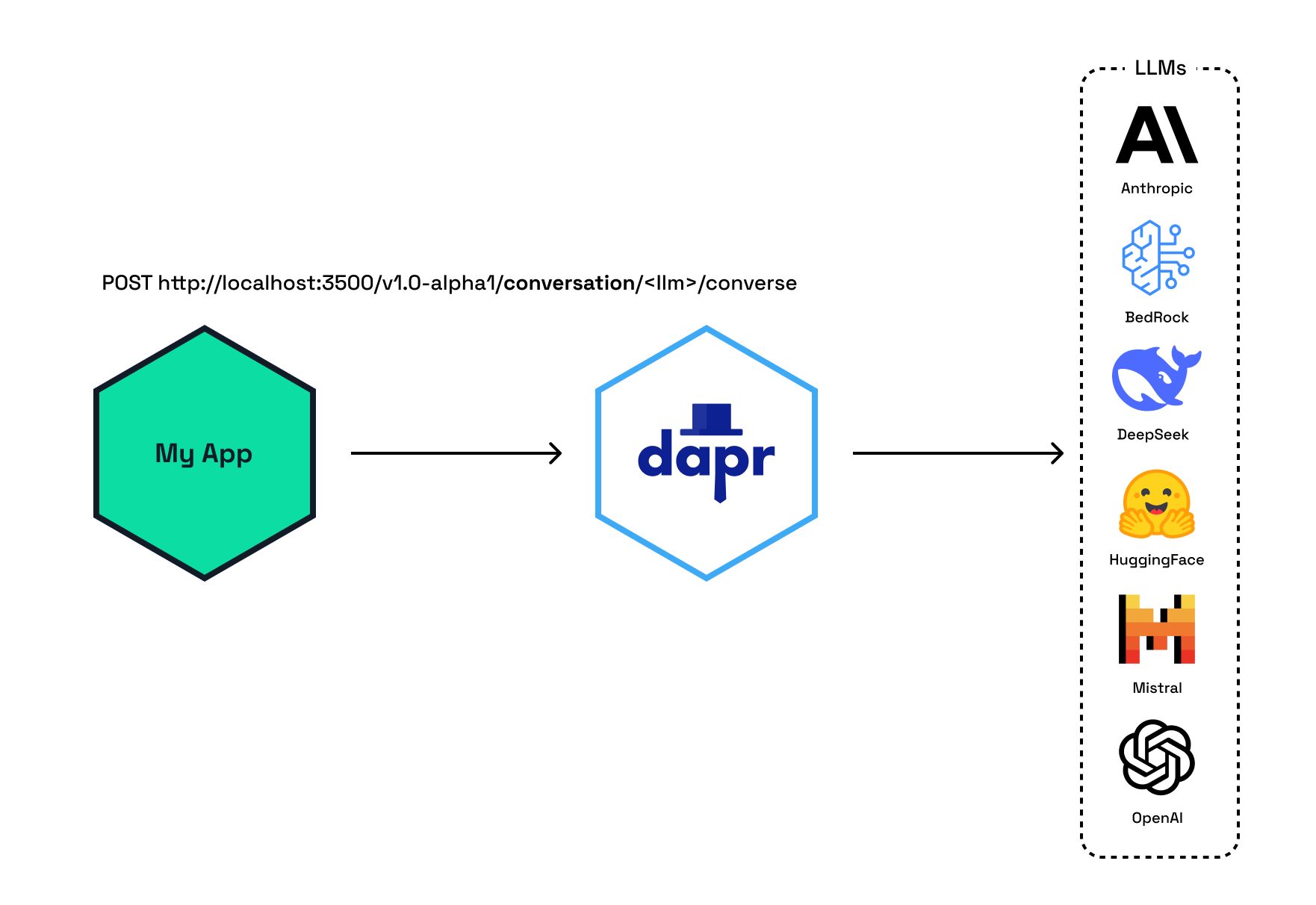

Let’s start with Dapr's new Conversation API, which provides a straightforward, secure, and reliable way to include Large Language Model (LLM) prompts in Dapr applications. This API gives you a consistent way to talk to LLM providers via Dapr LLM components, without having to install the native SDK for each LLM. The Dapr component model also makes it easy for developers to add new LLM components.

The Conversation API comes with built-in prompt caching and PII obfuscation.

- Performance is optimized by storing and reusing prompts that are often repeated across multiple API calls. Frequently used prompts are stored in a local cache and reused by your cluster, pod, or other workload, instead of the information being reprocessed for every new request.

- The PII obfuscation feature identifies and removes sensitive user information from conversation data. It can be enabled on both input and output data to safeguard your privacy and remove information that could be used to identify a person. For more information, see the Dapr docs.
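To make the caching idea concrete, here is a toy Python illustration of a prompt cache with a time-to-live, similar in spirit to the `cacheTTL` component setting used in the samples below. It is not Dapr's implementation. The `PromptCache` class and `converse` helper are hypothetical, and the injectable clock exists only so that expiry can be tested without sleeping.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class PromptCache:
    """Toy TTL cache keyed by prompt text (an illustration, not Dapr's implementation)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, prompt: str) -> Optional[str]:
        entry = self._entries.get(prompt)
        if entry is None:
            return None
        expires_at, response = entry
        if self.clock() >= expires_at:
            # Stale entry: evict it so the next call reaches the LLM again
            del self._entries[prompt]
            return None
        return response

    def put(self, prompt: str, response: str) -> None:
        self._entries[prompt] = (self.clock() + self.ttl_seconds, response)


def converse(prompt: str, cache: PromptCache, call_llm: Callable[[str], str]) -> str:
    """Answer from the cache when possible; otherwise call the LLM and cache the reply."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached
    response = call_llm(prompt)
    cache.put(prompt, response)
    return response
```

With a 600-second TTL (the `10m` used in the component samples), a repeated prompt is answered from the cache until its entry expires. After that, the next call goes back to the LLM.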

Watch the announcement and demo during the Dapr 1.15 release celebration:

The Dapr Conversation API can be used with the following LLM components: Anthropic, AWS Bedrock, DeepSeek, Hugging Face, Mistral, and OpenAI. There is also an echo component for testing purposes, which simply echoes back the request.

OpenAI component sample

```yaml
apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: openai
spec:
  type: conversation.openai
  metadata:
  - name: key
    value: <REPLACE_WITH_YOUR_KEY>
  - name: model
    value: gpt-4-turbo
  - name: cacheTTL
    value: 10m
```

Echo component sample

```yaml
apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: echo
spec:
  type: conversation.echo
  version: v1
```

The Conversation API is supported in the following Dapr SDKs: .NET, Python, Go, and Rust. You can also use the Conversation API without an SDK by sending a POST request to the conversation endpoint:

```
POST http://localhost:<daprPort>/v1.0-alpha1/conversation/<llm-name>/converse
```

Example HTTP request to call Anthropic:

```
POST http://localhost:3500/v1.0-alpha1/conversation/anthropic/converse?metadata.key=key1&metadata.model=claude-3-5-sonnet-20240620&metadata.cacheTTL=10m
```
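Instead of concatenating the query string by hand, you can build the `metadata.*` parameters with the standard library. This is a minimal sketch: the `converse_url` helper is hypothetical, and the request still needs a JSON body with `inputs`, as in the Python sample that follows.

```python
from urllib.parse import urlencode


def converse_url(component: str, metadata: dict, dapr_port: int = 3500) -> str:
    """Build a Conversation API URL that passes component metadata as metadata.* query parameters."""
    query = urlencode({f'metadata.{key}': value for key, value in metadata.items()})
    return f'http://localhost:{dapr_port}/v1.0-alpha1/conversation/{component}/converse?{query}'


url = converse_url('anthropic', {
    'key': 'key1',
    'model': 'claude-3-5-sonnet-20240620',
    'cacheTTL': '10m',
})
print(url)
```

Because `urlencode` percent-encodes any special characters, metadata values such as API keys can be passed safely.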

Here’s a Python sample that uses the Conversation HTTP API:

```python
import logging
import os

import requests

logging.basicConfig(level=logging.INFO)

base_url = os.getenv('BASE_URL', 'http://localhost') + ':' + os.getenv(
    'DAPR_HTTP_PORT', '3500')

CONVERSATION_COMPONENT_NAME = 'echo'

# Request body (named payload to avoid shadowing the built-in input())
payload = {
    'name': 'echo',
    'inputs': [{'content': 'What is dapr?'}],
    'parameters': {},
    'metadata': {}
}

# Send input to the conversation endpoint
result = requests.post(
    url='%s/v1.0-alpha1/conversation/%s/converse' % (base_url, CONVERSATION_COMPONENT_NAME),
    json=payload
)
result.raise_for_status()  # fail fast on a non-2xx response

logging.info('Input sent: What is dapr?')

# Parse conversation output
data = result.json()
output = data["outputs"][0]["result"]

logging.info('Output response: ' + output)
```

Source: https://github.com/dapr/quickstarts/blob/release-1.15/conversation/python/http/conversation/app.py

More information about the Conversation API can be found in the docs.

Dapr Workflow (stable)


Dapr Workflow enables developers to write long-running and fault-tolerant applications based on durable execution. The priority of the Dapr maintainers in the Dapr 1.15 release was to move the Workflow API to the stable phase in the lifecycle and ensure it is performant for production workloads. The Workflow API was introduced in February 2023 with the 1.10 release and has been rigorously tested and improved in the past two years.

A major overhaul of the workflow engine has resulted in substantial improvements in performance and scalability, as well as the dependability and stability essential for running mission-critical apps at massive scale. Workflow applications can now be scaled from zero to many replicas while maintaining the durability of tasks at runtime.

Watch the Dapr 1.15 Celebration video about Workflow:

Here’s a sample of a Dapr workflow authored in C#. This workflow defines a chain of activities and includes business logic to determine which of these activities are executed based on the order payload and the output of the VerifyInventoryActivity.

```csharp
internal sealed partial class OrderProcessingWorkflow : Workflow<OrderPayload, OrderResult>
{
    public override async Task<OrderResult> RunAsync(WorkflowContext context, OrderPayload order)
    {
        var logger = context.CreateReplaySafeLogger<OrderProcessingWorkflow>();
        var orderId = context.InstanceId;

        // Notify the user that an order has come through
        await context.CallActivityAsync(nameof(NotifyActivity),
            new Notification($"Received order {orderId} for {order.Quantity} {order.Name} at ${order.TotalCost}"));
        LogOrderReceived(logger, orderId, order.Quantity, order.Name, order.TotalCost);

        // Determine if there is enough of the item available for purchase by checking the inventory
        var inventoryRequest = new InventoryRequest(RequestId: orderId, order.Name, order.Quantity);
        var result = await context.CallActivityAsync<InventoryResult>(
            nameof(VerifyInventoryActivity), inventoryRequest);
        LogCheckInventory(logger, inventoryRequest);

        // If there is insufficient inventory, fail and let the user know
        if (!result.Success)
        {
            // End the workflow here since we don't have sufficient inventory
            await context.CallActivityAsync(nameof(NotifyActivity),
                new Notification($"Insufficient inventory for {order.Name}"));
            LogInsufficientInventory(logger, order.Name);
            return new OrderResult(Processed: false);
        }

        if (order.TotalCost > 5000)
        {
            await context.CallActivityAsync(nameof(RequestApprovalActivity),
                new ApprovalRequest(orderId, order.Name, order.Quantity, order.TotalCost));

            var approvalResponse = await context.WaitForExternalEventAsync<ApprovalResponse>(
                eventName: "ApprovalEvent",
                timeout: TimeSpan.FromSeconds(30));
            if (!approvalResponse.IsApproved)
            {
                await context.CallActivityAsync(nameof(NotifyActivity),
                    new Notification($"Order {orderId} was not approved"));
                LogOrderNotApproved(logger, orderId);
                return new OrderResult(Processed: false);
            }
        }

        // There is enough inventory available so the user can purchase the item(s). Process their payment
        var processPaymentRequest = new PaymentRequest(RequestId: orderId, order.Name, order.Quantity, order.TotalCost);
        await context.CallActivityAsync(nameof(ProcessPaymentActivity), processPaymentRequest);
        LogPaymentProcessing(logger, processPaymentRequest);

        try
        {
            // Update the available inventory
            var paymentRequest = new PaymentRequest(RequestId: orderId, order.Name, order.Quantity, order.TotalCost);
            await context.CallActivityAsync(nameof(UpdateInventoryActivity), paymentRequest);
            LogInventoryUpdate(logger, paymentRequest);
        }
        catch (TaskFailedException)
        {
            // Let them know their payment was processed, but there's insufficient inventory, so they're getting a refund
            await context.CallActivityAsync(nameof(NotifyActivity),
                new Notification($"Order {orderId} Failed! You are now getting a refund"));
            LogRefund(logger, orderId);
            return new OrderResult(Processed: false);
        }

        // Let them know their payment was processed
        await context.CallActivityAsync(nameof(NotifyActivity), new Notification($"Order {orderId} has completed!"));
        LogSuccessfulOrder(logger, orderId);

        // End the workflow with a success result
        return new OrderResult(Processed: true);
    }

    // ...
}
```

Try out Dapr Workflow with the Dapr Quickstarts, in .NET, Java, Python, JavaScript, or Go.

Dapr Actors

Due to a major overhaul of the Dapr Actor runtime engine, the reliability of handling actors at scale has been significantly improved. Actor placement dissemination is now scoped to a single namespace, which means less churn during dissemination events when actors are used across namespaces. The performance benefits will be described in detail in a separate post. No changes have been made to the Actor API surface, so existing actor implementations are not impacted.

Dapr 1.15 introduces the concept of actor targets. Implemented targets include app (normal actor apps), as well as the workflow (orchestration) and activity actor targets. This target abstraction results in a single, consistent actor engine implementation, replacing the separate actor implementations of previous Dapr versions. Actor targets also let contributors easily add new targets in future Dapr releases, for instance an agentic AI target. Targets are the extensibility point for bringing the actor runtime/framework to new domains.
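The target idea can be pictured as one engine that routes every actor call to the target registered for that actor type. The toy Python model below is purely illustrative. `ActorEngine`, `AppTarget`, and their methods are hypothetical names, not Dapr's internal (Go) types. A workflow or activity target would be another class with the same `invoke` method.

```python
from typing import Callable, Dict, Protocol


class ActorTarget(Protocol):
    """Anything that can handle a method invocation for a given actor ID."""

    def invoke(self, actor_id: str, method: str, payload: bytes) -> bytes: ...


class AppTarget:
    """Target that hands calls to user application code (a normal actor app)."""

    def __init__(self, handler: Callable[[str, str, bytes], bytes]) -> None:
        self._handler = handler

    def invoke(self, actor_id: str, method: str, payload: bytes) -> bytes:
        return self._handler(actor_id, method, payload)


class ActorEngine:
    """A single engine that routes every call to the target registered for its actor type."""

    def __init__(self) -> None:
        self._targets: Dict[str, ActorTarget] = {}

    def register(self, actor_type: str, target: ActorTarget) -> None:
        self._targets[actor_type] = target

    def call(self, actor_type: str, actor_id: str, method: str, payload: bytes) -> bytes:
        target = self._targets.get(actor_type)
        if target is None:
            raise KeyError(f'no target registered for actor type {actor_type!r}')
        return target.invoke(actor_id, method, payload)
```

In this model, adding a new domain means adding a new target class. The routing engine itself does not change.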

The Actor runtime has also been abstracted to allow the addition of new APIs, such as an Actor Pub/Sub API that will be coming to Dapr 1.16.

Actor reminders, a feature for scheduling periodic work, now default to using the stable Scheduler service. When you upgrade to 1.15, existing actor reminders are migrated from the Placement service to the Scheduler service as a one-time operation for each actor type. You can prevent this migration by setting the SchedulerReminders flag to false in the application configuration file for the actor type. See Scheduler Actor Reminders in the Dapr Docs.
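Here is a minimal sketch of such a configuration, assuming the standard Dapr `Configuration` resource format with feature flags under `spec.features`. The name `appconfig` is a placeholder. On Kubernetes, the app would reference it through the `dapr.io/config` annotation.

```yaml
apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: appconfig   # placeholder name
spec:
  features:
    # Keep actor reminders on the Placement service (skip the Scheduler migration)
    - name: SchedulerReminders
      enabled: false
```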

.NET SDK Updates

The .NET SDK now supports the Jobs API, the Conversation API, and streaming Pub/Sub.

Jobs API

Many applications need job scheduling, meaning the ability to take an action at some point in the future. The Jobs API is an orchestrator for scheduling these future jobs, either at a specific time or at a specific interval.

Example scenarios include:

- Schedule batch processing jobs to run every business day

- Schedule various maintenance scripts to perform clean-ups

- Schedule ETL jobs to run at specific times (hourly, daily) to fetch new data, process it, and update the data warehouse with the latest information.
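To show what scheduling looks like without an SDK, here is a sketch that builds a request for the Jobs HTTP endpoint on the sidecar (`POST /v1.0-alpha1/jobs/<name>`). The `@every 1m` schedule and `repeats: 10` mirror the .NET sample below. The `job_request` helper is hypothetical. The accepted encoding of `data` has changed between releases, so check the Jobs API reference for your version before relying on it.

```python
import json
from typing import Any, Dict, Optional, Tuple


def job_request(name: str, schedule: str, data: Dict[str, Any],
                repeats: Optional[int] = None, dapr_port: int = 3500) -> Tuple[str, str]:
    """Return the URL and JSON body for scheduling a job through the Dapr sidecar."""
    body: Dict[str, Any] = {'schedule': schedule, 'data': data}
    if repeats is not None:
        # Stop triggering the job after this many runs
        body['repeats'] = repeats
    url = f'http://localhost:{dapr_port}/v1.0-alpha1/jobs/{name}'
    return url, json.dumps(body)


url, body = job_request(
    'prod-db-backup',
    '@every 1m',
    {'task': 'db-backup', 'metadata': {'DBName': 'my-prod-db', 'BackupLocation': '/backup-dir'}},
    repeats=10,
)
print(url)
print(body)
```

The body could then be sent with any HTTP client, for example `requests.post(url, data=body, headers={'Content-Type': 'application/json'})`.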

Here’s a sample of a .NET application that schedules and executes a backup job.

Models

```csharp
using System.Text.Json.Serialization;

// Define the types used to represent the job data
internal sealed record BackupJobData([property: JsonPropertyName("task")] string Task, [property: JsonPropertyName("metadata")] BackupMetadata Metadata);
internal sealed record BackupMetadata([property: JsonPropertyName("DBName")] string DatabaseName, [property: JsonPropertyName("BackupLocation")] string BackupLocation);
```

Program.cs

```csharp
using System.Text;
using System.Text.Json;
using Dapr.Jobs;
using Dapr.Jobs.Extensions;
using Dapr.Jobs.Models;
using Dapr.Jobs.Models.Responses;

var builder = WebApplication.CreateBuilder(args);
builder.Services.AddDaprJobsClient();
var app = builder.Build();

// Registers an endpoint to receive and process triggered jobs
var cancellationTokenSource = new CancellationTokenSource(TimeSpan.FromSeconds(5));
// The handler awaits work, so the lambda must be async
app.MapDaprScheduledJobHandler(async (string jobName, DaprJobDetails jobDetails, ILogger logger, CancellationToken cancellationToken) =>
{
    logger?.LogInformation("Received trigger invocation for job '{jobName}'", jobName);
    switch (jobName)
    {
        case "prod-db-backup":
            // Deserialize the job payload metadata
            var jobData = JsonSerializer.Deserialize<BackupJobData>(jobDetails.Payload);

            // Process the backup operation - we assume this is implemented elsewhere in your code
            await BackupDatabaseAsync(jobData, cancellationToken);
            break;
    }
}, cancellationTokenSource.Token);

await app.RunAsync();
```

Job registration

```csharp
// Run this before app.RunAsync(), which blocks until the host shuts down
// Create a scope so we can access the registered DaprJobsClient
await using var scope = app.Services.CreateAsyncScope();
var daprJobsClient = scope.ServiceProvider.GetRequiredService<DaprJobsClient>();

// Create the payload we wish to present alongside our future job triggers
var jobData = new BackupJobData("db-backup", new BackupMetadata("my-prod-db", "/backup-dir"));

// Serialize our payload to UTF-8 bytes
var serializedJobData = JsonSerializer.SerializeToUtf8Bytes(jobData);

// Schedule our backup job to run every minute, but only repeat 10 times
await daprJobsClient.ScheduleJobAsync("prod-db-backup", DaprJobSchedule.FromDuration(TimeSpan.FromMinutes(1)),
    serializedJobData, repeats: 10);
```

Conversation API

The new Conversation API can be used with the Dapr .NET SDK. The sample below shows how to use the ConverseAsync method of the DaprConversationClient.

```csharp
using Dapr.AI.Conversation;
using Dapr.AI.Conversation.Extensions;

class Program
{
    private const string ConversationComponentName = "echo";

    static async Task Main(string[] args)
    {
        const string prompt = "What is dapr?";

        var builder = WebApplication.CreateBuilder(args);
        builder.Services.AddDaprConversationClient();
        var app = builder.Build();

        // Instantiate Dapr Conversation Client
        var conversationClient = app.Services.GetRequiredService<DaprConversationClient>();

        try
        {
            // Send a request to the echo mock LLM component
            var response = await conversationClient.ConverseAsync(ConversationComponentName, [new(prompt, DaprConversationRole.Generic)]);
            Console.WriteLine("Input sent: " + prompt);

            if (response != null)
            {
                Console.Write("Output response:");
                foreach (var resp in response.Outputs)
                {
                    Console.WriteLine($" {resp.Result}");
                }
            }
        }
        catch (Exception ex)
        {
            Console.WriteLine("Error: " + ex.Message);
        }
    }
}
```

Streaming Pub/Sub

The .NET SDK now supports streaming Pub/Sub. With this subscription type, subscriptions are dynamic and can be added and removed at runtime.

```csharp
using System.Text;
using Dapr.Messaging.PublishSubscribe;
using Dapr.Messaging.PublishSubscribe.Extensions;

var builder = WebApplication.CreateBuilder(args);
builder.Services.AddDaprPubSubClient();
var app = builder.Build();

// Process each message returned from the subscription
Task<TopicResponseAction> HandleMessageAsync(TopicMessage message, CancellationToken cancellationToken = default)
{
    try
    {
        // Do something with the message
        Console.WriteLine(Encoding.UTF8.GetString(message.Data.Span));
        return Task.FromResult(TopicResponseAction.Success);
    }
    catch
    {
        return Task.FromResult(TopicResponseAction.Retry);
    }
}

var messagingClient = app.Services.GetRequiredService<DaprPublishSubscribeClient>();

// Create a dynamic streaming subscription and subscribe with a timeout of 30 seconds and 10 seconds for message handling
var cancellationTokenSource = new CancellationTokenSource(TimeSpan.FromSeconds(30));
var subscription = await messagingClient.SubscribeAsync("pubsub", "myTopic",
    new DaprSubscriptionOptions(new MessageHandlingPolicy(TimeSpan.FromSeconds(10), TopicResponseAction.Retry)),
    HandleMessageAsync, cancellationTokenSource.Token);

await Task.Delay(TimeSpan.FromMinutes(1));

// When you're done with the subscription, simply dispose of it
await subscription.DisposeAsync();
```

Python SDK Updates

The Python SDK now supports the new Conversation API to talk to LLMs, and the Workflow API has been updated to align with the other Dapr SDKs.

Conversation API

This example shows how to call the Conversation API with the Python SDK:

```python
from dapr.clients import DaprClient
from dapr.clients.grpc._request import ConversationInput

with DaprClient() as d:
    inputs = [
        ConversationInput(content="What's Dapr?", role='user', scrub_pii=True),
        ConversationInput(content='Give a brief overview.', role='user', scrub_pii=True),
    ]

    metadata = {
        'model': 'foo',
        'key': 'authKey',
        'cacheTTL': '10m',
    }

    response = d.converse_alpha1(
        name='echo', inputs=inputs, temperature=0.7, context_id='chat-123', metadata=metadata
    )

    for output in response.outputs:
        print(f'Result: {output.result}')
```

Source: https://github.com/dapr/python-sdk/blob/release-1.15/examples/conversation/conversation.py

Workflow API

The DaprClient workflow functions were deprecated in favor of the DaprWorkflowClient to align with all other Dapr SDKs.

```python
from time import sleep

import dapr.ext.workflow as wf

# wfr (the workflow runtime) and task_chain_workflow are defined earlier in the source file

if __name__ == '__main__':
    wfr.start()
    sleep(10)  # wait for workflow runtime to start

    wf_client = wf.DaprWorkflowClient()
    instance_id = wf_client.schedule_new_workflow(workflow=task_chain_workflow, input=42)
    print(f'Workflow started. Instance ID: {instance_id}')
    state = wf_client.wait_for_workflow_completion(instance_id)
    print(f'Workflow completed! Status: {state.runtime_status}')

    wfr.shutdown()
```

Source: https://github.com/dapr/python-sdk/blob/main/examples/workflow/task_chaining.py

Streaming Pub/Sub

The Python SDK now supports streaming Pub/Sub to dynamically add and remove subscriptions at runtime.

Subscriber

```python
import argparse
import time

from dapr.clients import DaprClient
from dapr.clients.grpc.subscription import StreamInactiveError
from dapr.common.pubsub.subscription import StreamCancelledError

counter = 0

parser = argparse.ArgumentParser(description='Subscribe to a Dapr pub/sub topic.')
parser.add_argument('--topic', type=str, required=True, help='The topic name to subscribe to.')
args = parser.parse_args()

topic_name = args.topic
dlq_topic_name = topic_name + '_DEAD'


def process_message(message):
    global counter
    counter += 1
    # Process the message here
    print(f'Processing message: {message.data()} from {message.topic()}...', flush=True)
    return 'success'


def main():
    global counter
    with DaprClient() as client:
        try:
            subscription = client.subscribe(
                pubsub_name='pubsub', topic=topic_name, dead_letter_topic=dlq_topic_name
            )
        except Exception as e:
            print(f'Error occurred: {e}')
            return

        try:
            for message in subscription:
                if message is None:
                    print('No message received. The stream might have been cancelled.')
                    continue

                try:
                    response_status = process_message(message)

                    if response_status == 'success':
                        subscription.respond_success(message)
                    elif response_status == 'retry':
                        subscription.respond_retry(message)
                    elif response_status == 'drop':
                        subscription.respond_drop(message)

                    if counter >= 5:
                        break
                except StreamInactiveError:
                    print('Stream is inactive. Retrying...')
                    time.sleep(1)
                    continue
                except StreamCancelledError:
                    print('Stream was cancelled')
                    break
                except Exception as e:
                    print(f'Error occurred during message processing: {e}')

        finally:
            print('Closing subscription...')
            subscription.close()


if __name__ == '__main__':
    main()
```

Subscriber handler

```python
import argparse
import time

from dapr.clients import DaprClient
from dapr.clients.grpc._response import TopicEventResponse

counter = 0

parser = argparse.ArgumentParser(description='Subscribe to a Dapr pub/sub topic.')
parser.add_argument('--topic', type=str, required=True, help='The topic name to subscribe to.')
args = parser.parse_args()

topic_name = args.topic
dlq_topic_name = topic_name + '_DEAD'


def process_message(message):
    # Process the message here
    global counter
    counter += 1
    print(f'Processing message: {message.data()} from {message.topic()}...', flush=True)
    return TopicEventResponse('success')


def main():
    with DaprClient() as client:
        # This will start a new thread that will listen for messages
        # and process them in the `process_message` function
        close_fn = client.subscribe_with_handler(
            pubsub_name='pubsub',
            topic=topic_name,
            handler_fn=process_message,
            dead_letter_topic=dlq_topic_name,
        )

        while counter < 5:
            time.sleep(1)

        print('Closing subscription...')
        close_fn()


if __name__ == '__main__':
    main()
```

Source: https://github.com/dapr/python-sdk/tree/main/examples/pubsub-streaming

Actor Mock Testing

The Python SDK now supports creating mock actors to unit test actor methods and verify how they interact with the actor state.

```python
import unittest

from demo_actor import DemoActor

from dapr.actor.runtime.mock_actor import create_mock_actor


class DemoActorTests(unittest.IsolatedAsyncioTestCase):
    def test_create_actor(self):
        mockactor = create_mock_actor(DemoActor, '1')
        self.assertEqual(mockactor.id.id, '1')

    async def test_get_data(self):
        mockactor = create_mock_actor(DemoActor, '1')
        self.assertFalse(mockactor._state_manager._mock_state)  # type: ignore
        val = await mockactor.get_my_data()
        self.assertIsNone(val)

    async def test_set_data(self):
        mockactor = create_mock_actor(DemoActor, '1')
        await mockactor.set_my_data({'state': 5})
        val = await mockactor.get_my_data()
        # Compare values with assertEqual; assertIs on ints relies on interpreter caching
        self.assertEqual(val['state'], 5)  # type: ignore

    async def test_clear_data(self):
        mockactor = create_mock_actor(DemoActor, '1')
        await mockactor.set_my_data({'state': 5})
        val = await mockactor.get_my_data()
        self.assertEqual(val['state'], 5)  # type: ignore
        await mockactor.clear_my_data()
        val = await mockactor.get_my_data()
        self.assertIsNone(val)

    async def test_reminder(self):
        mockactor = create_mock_actor(DemoActor, '1')
        self.assertFalse(mockactor._state_manager._mock_reminders)  # type: ignore
        await mockactor.set_reminder(True)
        self.assertIn('demo_reminder', mockactor._state_manager._mock_reminders)  # type: ignore
        await mockactor.set_reminder(False)
        self.assertFalse(mockactor._state_manager._mock_reminders)  # type: ignore
```

Source: https://github.com/dapr/python-sdk/blob/main/examples/demo_actor/demo_actor/test_demo_actor.py

For more info on Actor Mock Testing in Python see the docs.

Java SDK Updates

Many improvements have been made to the Java SDK to integrate Spring Boot with Dapr. These improvements include:

- DaprClient and DaprWorkflowClient bean injection using dapr-spring-boot-autoconfigure.

- Spring Data CrudRepository support using Dapr StateStore + Bindings using dapr-spring-data.

- Spring Messaging support using Dapr PubSub APIs using dapr-spring-messaging.

- Dapr Workflows support with automatic Workflows and Activities registration and support for bean injection using dapr-spring-workflows.

- Testcontainers integration providing DaprContainer and PlacementServiceContainer using testcontainers-dapr.

The individual dapr-spring-* packages are combined in the dapr-spring-boot-starter package so you can get up and running quickly with the Dapr APIs.

Here’s an example of how to use Spring Data CrudRepository with dapr-spring-data:

```java
@RestController
@EnableDaprRepositories
public class OrdersRestController {

    @Autowired
    private OrderRepository repository;

    @PostMapping("/orders")
    public void storeOrder(@RequestBody Order order) {
        repository.save(order);
    }

    @GetMapping("/orders")
    public Iterable<Order> getAll() {
        return repository.findAll();
    }
}
```

The dapr-spring-messaging package enables easy pub/sub messaging using the DaprMessagingTemplate:

```java
@Autowired
private DaprMessagingTemplate<Order> messagingTemplate;

@PostMapping("/orders")
public void storeOrder(@RequestBody Order order) {
    repository.save(order);
    messagingTemplate.send("topic", order);
}
```

The dapr-spring-workflows package brings Dapr Workflow integration to Spring Boot users. With Dapr Workflow, you write workflows as code. The Dapr Spring Boot Starter makes your life easier by managing Workflow and WorkflowActivity implementations as Spring beans.

To enable automatic bean discovery, annotate your @SpringBootApplication class with the @EnableDaprWorkflows annotation:

```java
@SpringBootApplication
@EnableDaprWorkflows
public class MySpringBootApplication {}
```

With this annotation in place, all WorkflowActivity implementations are automatically managed by Spring and registered with the workflow engine.

This also allows Spring's @Autowired mechanism to inject any bean that a workflow activity needs to implement its functionality, for example a RestTemplate:

```java
public class MyWorkflowActivity implements WorkflowActivity {

    @Autowired
    private RestTemplate restTemplate;

    // ... activity implementation that uses restTemplate
}
```

Read more about the Dapr Spring Boot integration in the Dapr Docs.

JavaScript SDK Updates

The JavaScript SDK has been updated to reach feature parity with the other Dapr SDKs for Dapr Workflow. The DaprWorkflowClient now supports suspending and resuming workflows, and workflows can now set a custom status on the workflow context.

```typescript
import { DaprWorkflowClient } from "@dapr/dapr";

async function printWorkflowStatus(client: DaprWorkflowClient, instanceId: string) {
  const workflow = await client.getWorkflowState(instanceId);
  console.log(
    `Workflow ${workflow.name()}, created at ${workflow.createdAt().toUTCString()}, has status ${
      workflow.runtimeStatus()
    }`,
  );
}

async function start() {
  const client = new DaprWorkflowClient();

  // Start a new workflow instance
  const instanceId = await client.scheduleNewWorkflow("OrderProcessingWorkflow", {
    Name: "Paperclips",
    TotalCost: 99.95,
    Quantity: 4,
  });
  console.log(`Started workflow instance ${instanceId}`);
  await printWorkflowStatus(client, instanceId);

  // Suspend a workflow instance
  await client.suspendWorkflow(instanceId);
  console.log(`Paused workflow instance ${instanceId}`);
  await printWorkflowStatus(client, instanceId);

  // Resume a workflow instance
  await client.resumeWorkflow(instanceId);
  console.log(`Resumed workflow instance ${instanceId}`);
  await printWorkflowStatus(client, instanceId);

  // Wait 30 secs for the workflow to complete
  await new Promise((resolve) => setTimeout(resolve, 30000));
  await printWorkflowStatus(client, instanceId);

  // Purge a workflow instance
  await client.purgeWorkflow(instanceId);
  console.log(`Purged workflow instance ${instanceId}`);
}

start().catch((e) => {
  console.error(e);
  process.exit(1);
});
```

Go SDK Updates

The Go SDK now has support for the Conversation API to make calls to Large Language Models. Here’s an example of how to use the Conversation API:

```go
package main

import (
	"context"
	"fmt"
	"log"

	dapr "github.com/dapr/go-sdk/client"
)

func main() {
	client, err := dapr.NewClient()
	if err != nil {
		panic(err)
	}
	defer client.Close()

	input := dapr.ConversationInput{
		Message: "hello world",
		// Role:     nil, // Optional
		// ScrubPII: nil, // Optional
	}

	fmt.Printf("conversation input: %s\n", input.Message)

	var conversationComponent = "echo"

	request := dapr.NewConversationRequest(conversationComponent, []dapr.ConversationInput{input})

	resp, err := client.ConverseAlpha1(context.Background(), request)
	if err != nil {
		log.Fatalf("err: %v", err)
	}

	fmt.Printf("conversation output: %s\n", resp.Outputs[0].Result)
}
```

The Go SDK has also been updated to reach feature parity with the other SDKs for Dapr Workflow. For example, the Go SDK now includes retry policies for activities:

```go
// Excerpt from a workflow function: FailActivity is expected to fail, so the call
// is retried according to the policy and should ultimately return an error
if err := ctx.CallActivity(FailActivity, workflow.ActivityRetryPolicy(workflow.RetryPolicy{
	MaxAttempts:          3,
	InitialRetryInterval: 100 * time.Millisecond,
	BackoffCoefficient:   2,
	MaxRetryInterval:     1 * time.Second,
})).Await(nil); err == nil {
	return nil, fmt.Errorf("unexpected no error executing fail activity")
}
```

Source: https://github.com/dapr/go-sdk/blob/main/examples/workflow/main.go
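To see what that policy means in practice, the sketch below lists the waits between attempts. It assumes the common capped exponential backoff rule, where the n-th retry waits `InitialRetryInterval × BackoffCoefficient^(n-1)`, capped at `MaxRetryInterval`. The `retry_delays` function is a hypothetical helper, not part of the Go SDK. Check the workflow engine's retry implementation for edge cases such as an overall retry timeout.

```python
from datetime import timedelta
from typing import List


def retry_delays(max_attempts: int, initial: timedelta, coefficient: float,
                 max_interval: timedelta) -> List[timedelta]:
    """Waits before each retry, assuming capped exponential backoff (hypothetical helper)."""
    delays = []
    # Only attempts after the first are preceded by a wait, hence max_attempts - 1 entries
    for retry in range(max_attempts - 1):
        delay = initial * (coefficient ** retry)
        delays.append(min(delay, max_interval))
    return delays


# The policy from the Go snippet: 3 attempts, 100 ms initial interval, coefficient 2, 1 s cap
print(retry_delays(3, timedelta(milliseconds=100), 2, timedelta(seconds=1)))
```

Under this assumption, the Go policy waits 100 ms and then 200 ms. The 1-second cap only matters with more attempts: six attempts would wait 100, 200, 400, 800, and 1000 ms.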

Rust SDK

Conversation API support is available in the Rust SDK, and progress is being made in other areas toward a refactor that will bring the SDK to a beta/stable state.

```rust
use dapr::client::{ConversationInputBuilder, ConversationRequestBuilder};
use std::thread;
use std::time::Duration;

type DaprClient = dapr::Client<dapr::client::TonicClient>;

#[tokio::main]
async fn main() -> Result<(), Box<dyn std::error::Error>> {
    // Sleep to allow for the server to become available
    thread::sleep(Duration::from_secs(5));

    // Set the Dapr address
    let address = "https://127.0.0.1".to_string();

    let mut client = DaprClient::connect(address).await?;

    let input = ConversationInputBuilder::new("Please write a witty haiku about the Dapr distributed programming framework at dapr.io").build();

    let conversation_component = "echo";

    let request =
        ConversationRequestBuilder::new(conversation_component, vec![input.clone()]).build();

    println!("conversation input: {:?}", input.message);

    let response = client.converse_alpha1(request).await?;

    println!("conversation output: {:?}", response.outputs[0].result);
    Ok(())
}
```

For more information on using the Conversation API in Rust, read How-To: Converse with an LLM using the conversation API in the Dapr Docs.

Runtime

Scheduler Service (stable)


The Scheduler service, which is responsible for scheduling tasks for the Jobs API, actor reminders, and Workflow API reminders, is now stable and can be used in production.

Watch the video where the Scheduler Service is introduced:

Kubernetes Access control

The following improvements have been made for running Dapr on Kubernetes:

- The Scheduler service RBAC ClusterRole has a new rule granting read-only access to namespace resources, allowing the ["get", "list", "watch"] operations.

- Recommendations have been added to the docs for configuring the Placement service in production to better handle actor failures.
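In Kubernetes RBAC terms, a read-only rule for namespaces looks like the fragment below. This is a sketch of the kind of rule described above, not a copy of the Dapr Helm chart, whose exact ClusterRole contents may differ.

```yaml
# Read-only access to Namespace objects (core API group)
- apiGroups: [""]
  resources: ["namespaces"]
  verbs: ["get", "list", "watch"]
```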

Installing the 1.15 release

Locally with the Dapr CLI

When you’re using the Dapr CLI on your local dev machine, perform the following steps to upgrade to the new Dapr version:

1. Uninstall Dapr: dapr uninstall --all

2. Install Dapr: dapr init

To upgrade the Dapr CLI itself:

- Linux: wget -q https://raw.githubusercontent.com/dapr/cli/master/install/install.sh -O - | /bin/bash

- macOS: brew upgrade dapr-cli

- Windows: winget upgrade Dapr.CLI

Verify the installation by running dapr -v.

On Kubernetes with Diagrid Conductor

The easiest way to upgrade Dapr on your Kubernetes clusters is via Diagrid Conductor Free, which helps Dapr developers visualize, troubleshoot, and optimize their Dapr applications running on Kubernetes.

You can sign up for Conductor here, and try it for your Dapr apps on Kubernetes.

What's next?

This post is not a complete list of features and changes released in version 1.15. Read the official Dapr release notes for more information including a list of all the new features, fixes, and breaking changes.

Are you new to Dapr? Try out the free hands-on Dapr 101 course at Dapr University. Want to talk about the new features with other Dapr developers? Join the Dapr Discord to connect with thousands of Dapr users.